-

Mapping Cicero

Mapping Social Life Through Cicero’s Letters

As I’ve mentioned before on Twitter, I’m interested in using the visualization tool Gephi to explore social relationships in Cicero’s letters:

Gathering up metadata on Cicero's letters. Plan to import it into Gephi and analyze epistolary correspondence as a sort of 'social network'. Github repo: https://t.co/LbfPcBX6Dl #ancmakers pic.twitter.com/LAD0gkWIMd

— Ryan Pasco (@rympasco) January 14, 2018

Now that summer is here, I am excited to devote greater attention to this project. Along the way, I’ll be posting brief updates here about the challenges I face and some of my decisions in structuring the project.

The benefits of this are two-fold. First, I believe that doing scholarly work in public view – however anxiety-inducing – is a great way to get early and valuable feedback, especially in a project like this that requires proficiencies in many different areas. Second, part of our goal with ergaleia is to emphasize the process of digital projects in addition to their – often exciting! – end results. We at ergaleia have encountered plenty of faculty members who are excited by the potential of new digital tools but don’t have a good sense of what digital research looks like in practice. In part, ergaleia is aimed at them. Digital scholarship done in the open, we hope, will make what seems unapproachable approachable.

With that in mind, I’d like to give a brief introduction to what I aim to achieve in the project, how I came to it, and the software I’m using. This is all quite cursory, and will all be followed up in greater depth in future posts as I get further along.

Goals

My goal is to make an interactive ‘web’ of individuals represented in Cicero’s letters (addressors, addressees, and those named or alluded to within the bodies of the letters themselves) and their relationships with each other as the letters describe them. Each relationship will be dated to allow the user to watch social relationships as they shift over time; further, the relationships will have a number of attributes assigned to them, such as letter number, letter type, topic of letter, social status/ethnic origins of addressee, etc., that will allow the user to filter through the data and intuitively explore specific questions. For example: does the portrait of social life meaningfully differ in letters on philosophical vs. political topics? If so, how? The end-product will be freely available and, I hope, useful for those asking questions of Cicero’s letter collection and also as a pedagogical aid for those teaching about the late Republic.
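To make the data model described above a little more concrete, here is a minimal Python sketch of dated relationships that carry filterable attributes. Everything in it is an assumption made for illustration: the `Relationship` fields, the convention of storing BCE years as negative integers, and the placeholder people, letters, and topics, none of which are real project data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    source: str   # person on one end of the relationship
    target: str   # person on the other end
    year: int     # year of the attesting letter; BCE stored as a negative number
    letter: str   # letter reference (placeholder values below)
    topic: str    # hypothetical topic label


# Placeholder records for illustration only.
RELATIONSHIPS = [
    Relationship("Person A", "Person B", -50, "Letter 1", "political"),
    Relationship("Person A", "Person C", -46, "Letter 2", "philosophical"),
    Relationship("Person B", "Person C", -45, "Letter 3", "political"),
]


def active_between(relationships, start, end, topic=None):
    """Return relationships dated within [start, end], optionally limited to one topic."""
    return [
        r for r in relationships
        if start <= r.year <= end and (topic is None or r.topic == topic)
    ]


# Relationships attested between 47 and 44 BCE, then only the political ones.
window = active_between(RELATIONSHIPS, -47, -44)
political = active_between(RELATIONSHIPS, -47, -44, topic="political")
```

Sliding the `start` and `end` values along a timeline is one simple way to approximate watching relationships shift over time; a real implementation would also have to cope with letters whose dates are uncertain.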

I am not, of course, the first person to look into the idea of mapping social networks in ancient epistolography. Benjamin Hicks at Princeton is working on mapping Pliny’s letters. More relevant to the project at hand, Caitlin Marley of the University of Iowa recently defended her dissertation “Sentiments, Networks, Literary Biography: Towards a Mesoanalysis of Cicero’s Corpus”, in which she examines the emotional plot of Cicero’s orations using sentiment analysis, a way of computationally determining the positive/negative emotion of a text or piece of text by tracking the use of positive and negative words throughout. In her project, she also uses Cicero’s letters to create a social network and examines the positivity or negativity of correspondence between individuals:

To everyone who expressed interest in the idea, look forward the work of Caitlin Marley @CaitlinAMarley at U of Iowa, who is wrapping up a diss. on this very subject! #ancmakers https://t.co/mErXCDzszM

— Ryan Pasco (@rympasco) January 14, 2018

By the end of the project, though, I suspect I’ll have ventured pretty far in my own direction, as any attempt to render something as messy as social relationships into a rigid scheme will be pretty different from other attempts and will respond to different obstacles by different means. In part, I make my own attempt in order to better familiarize myself with Gephi and social network analysis in general; in later work, I’d like to use social network analysis to examine competition & allusion in the comic fragments, and this project will be a great learning experience.
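The word-counting idea behind sentiment analysis mentioned above can be illustrated with a toy example. This is only a sketch of the general lexicon-based technique, not a reconstruction of the method used in the dissertation; the two word lists are hypothetical stand-ins for a properly curated sentiment lexicon.

```python
import re

# Hypothetical mini-lexicons for illustration only.
POSITIVE = {"good", "happy", "friend", "hope"}
NEGATIVE = {"bad", "fear", "enemy", "grief"}


def sentiment_score(text):
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"\w+", text.lower())
    positives = sum(word in POSITIVE for word in words)
    negatives = sum(word in NEGATIVE for word in words)
    return positives - negatives


def sentiment_by_chunk(text, chunk_size):
    """Score consecutive chunks of `chunk_size` words to trace a rough emotional arc."""
    words = re.findall(r"\w+", text.lower())
    return [
        sentiment_score(" ".join(words[i:i + chunk_size]))
        for i in range(0, len(words), chunk_size)
    ]


arc = sentiment_by_chunk("good friend bad enemy", 2)
```

Scoring a text chunk by chunk, rather than as a whole, is what lets the scores be read as a "plot" that rises and falls over the course of a work.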

Why This Project?

My interest in the project began in my first semester of graduate school when I took a seminar on Latin epistolography. It was my first meaningful look at Cicero’s letters; in the process, I became fascinated with one figure, the Etruscan Aulus Caecina, with whom Cicero corresponds (Ad Fam. 6.5-8). Over a decade after his own exile, Cicero exchanges letters in 46 BCE with Caecina, himself exiled for writing a tract against Caesar.

The letters capture the social positions of Aulus Caecina and Cicero in a brief span of time. Caecina the exile seeks Caesar’s forgiveness through Cicero as intermediary, gets updates on his son at Rome, and writes a praise-work of Caesar (with Cicero as editor); Cicero gathers information on Caesar’s disposition towards Caecina (through Largus, a friend of the Etruscan), communicates with the absent Caesar’s associates Balbus and Oppius, and recommends Caecina to T. Furfanius Postumus and his legates. Without these few letters, we would know little about Caecina or about his relationship with elite Romans. As Seneca writes of Cicero’s correspondence with Atticus:

Nomen Attici perire Ciceronis epistulae non sinunt.

“Cicero’s letters do not let the name of Atticus perish”.

Perhaps an overstatement in the case of Atticus, but the spirit of the utterance stands: so much of our knowledge of the period hangs upon this correspondence. My fascination with the letters of Cicero and Caecina, which comprise only a small portion of the ad Familiares, only a small part of the picture of social life captured in the letter collection, gave way to an interest in social relationships in the letters of Cicero more broadly. How detailed a web of social life can we really draw from Cicero’s letters? How do the many minor figures that Cicero mentions or writes about fit in? How do social relations change in conjunction with major political events? How might the web of relationships change based on the type or topic of letter or the addressee? How could I begin to collect and sort through so much data? Though interested in these questions, I didn’t know the best way to approach them, given the intimidating size of Cicero’s collected letters.

Gephi

It wasn’t until I attended HILT in 2017 that I learned of a possible approach. A fellow participant introduced me to a mapping project of Carolingian intellectual networks by Clare Woods that uses the program Gephi. With Gephi, a user can easily create and manipulate visualizations that help to intuitively explore and discover patterns in data.

I will touch on different features of Gephi in subsequent posts as they come up, but for now a brief explanation is in order. Gephi graphs networks made up of actors (called “nodes”). Nodes in the network can be assigned labels – in the case of Cicero’s correspondence, the name of the individual the node represents – as well as other attributes, like gender, social class, philosophical associations, etc. The relationships between these nodes are called “edges”; these too can be assigned attributes, such as the type of relationship (addressor-addressee, etc.) or the genre or topic of the letter it takes place in. These attributes can be used to filter the graph: for example, I may be interested in displaying only equestrians with at least three connections to other individuals in the network.
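The node, edge, and attribute vocabulary above maps naturally onto two small tables. The Python sketch below assumes the `Id`/`Label` and `Source`/`Target` column names that Gephi's spreadsheet import conventionally looks for; the people, the `social_class` and `relation` attributes, and the helper functions are all hypothetical, and the filter only imitates in plain Python what one would normally do inside Gephi itself.

```python
import csv
import io
from collections import Counter

# Placeholder people and attributes, not real prosopographical data.
NODES = [
    {"Id": "n1", "Label": "Person A", "social_class": "senator"},
    {"Id": "n2", "Label": "Person B", "social_class": "equestrian"},
    {"Id": "n3", "Label": "Person C", "social_class": "equestrian"},
    {"Id": "n4", "Label": "Person D", "social_class": "senator"},
]
EDGES = [
    {"Source": "n1", "Target": "n2", "relation": "addressor-addressee"},
    {"Source": "n2", "Target": "n3", "relation": "mentioned"},
    {"Source": "n2", "Target": "n4", "relation": "mentioned"},
    {"Source": "n1", "Target": "n3", "relation": "addressor-addressee"},
]


def degree_counts(edges):
    """Count how many edges touch each node."""
    degree = Counter()
    for edge in edges:
        degree[edge["Source"]] += 1
        degree[edge["Target"]] += 1
    return degree


def filter_nodes(nodes, edges, social_class, min_degree):
    """Keep nodes of one social class that have at least `min_degree` connections."""
    degree = degree_counts(edges)
    return [
        node for node in nodes
        if node["social_class"] == social_class and degree[node["Id"]] >= min_degree
    ]


def to_csv(rows):
    """Serialize a list of dicts as CSV text suitable for a spreadsheet import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Equestrians with at least three connections in the placeholder network.
well_connected = filter_nodes(NODES, EDGES, "equestrian", 3)
nodes_csv = to_csv(NODES)
```

Keeping nodes and edges in separate tables mirrors the distinction drawn above: attributes of people live with the nodes, attributes of relationships live with the edges.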

These nodes and edges can be changed to communicate different parts of the data: the centrality of a node to the network, i.e. how close it is to other nodes, might determine its size in the visualization, for example. The completed network can be arranged with a number of layouts that position nodes based on specific criteria. I’ll write more about this as it comes up, but for now this tutorial has some valuable explanations.
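One common way to measure "how close a node is to other nodes" is closeness centrality. The sketch below is a plain-Python illustration of that idea on a placeholder network; it is not Gephi's own implementation, and the size range used to scale the nodes is an arbitrary assumption.

```python
from collections import deque

# Placeholder undirected network stored as an adjacency mapping.
GRAPH = {
    "A": {"B", "C", "D"},
    "B": {"A"},
    "C": {"A", "D"},
    "D": {"A", "C"},
}


def shortest_path_lengths(graph, start):
    """Breadth-first search: number of steps from `start` to every reachable node."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances


def closeness_centrality(graph, node):
    """Reachable nodes divided by total distance to them (higher means more central)."""
    distances = shortest_path_lengths(graph, node)
    total = sum(distances.values())
    if total == 0:  # isolated node
        return 0.0
    return (len(distances) - 1) / total


def node_sizes(graph, min_size=10.0, max_size=50.0):
    """Scale each node's closeness score linearly into a display size."""
    scores = {node: closeness_centrality(graph, node) for node in graph}
    low, high = min(scores.values()), max(scores.values())
    span = high - low
    return {
        node: min_size if span == 0 else min_size + (score - low) / span * (max_size - min_size)
        for node, score in scores.items()
    }


sizes = node_sizes(GRAPH)
```

In the placeholder network, "A" is one step from everyone and so gets the largest size, while "B", which can only reach the others through "A", gets the smallest.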

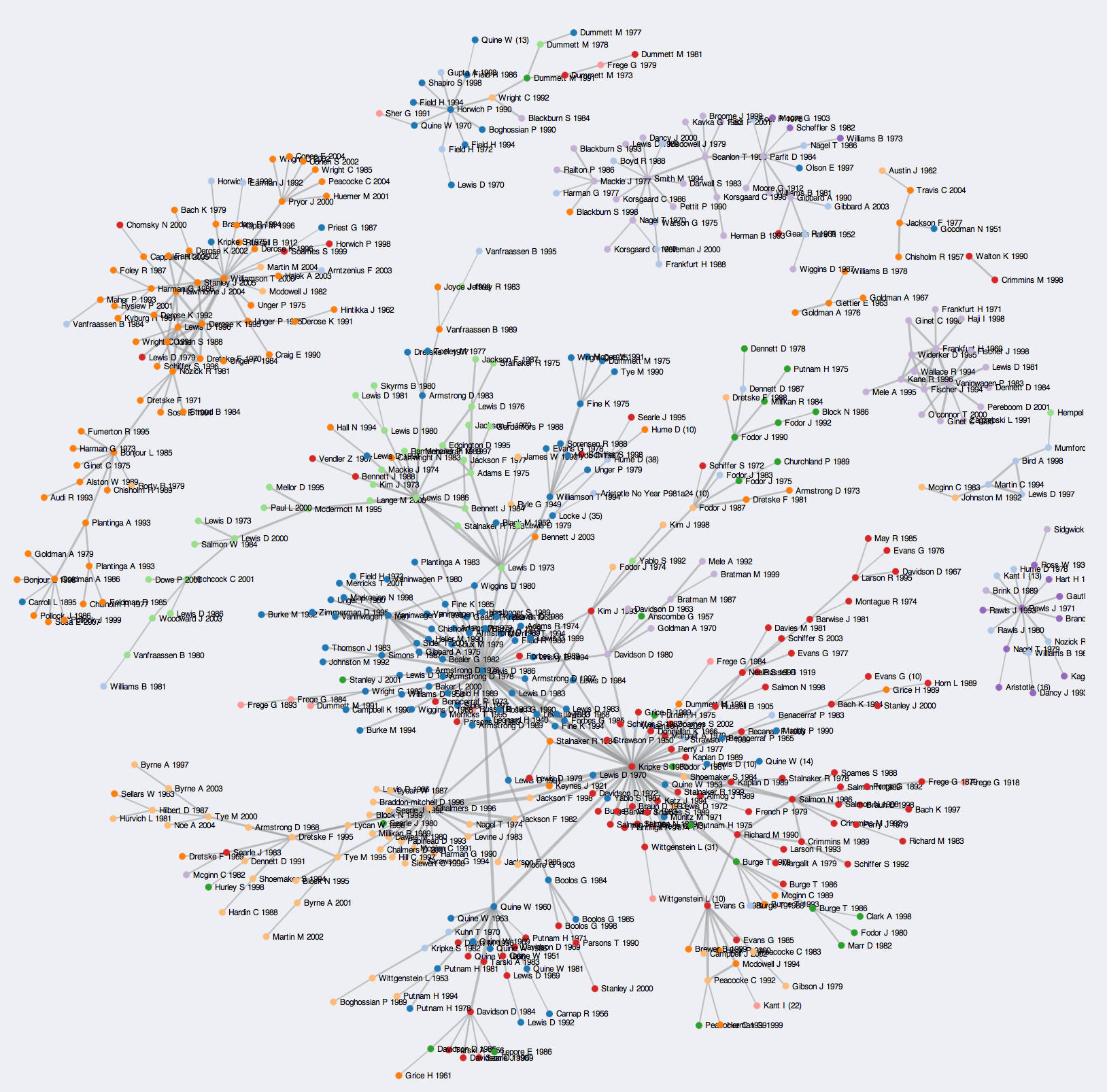

Though not made in Gephi specifically, the Co-Citation Network for Philosophy by Kieran Healy at Duke is an instructive example:

The network is composed of co-citations of items (if both items are cited in the same book or article, they have a relation) in four philosophy journals from 1993 to 2013. An algorithm arranges the network into ‘communities’ of interrelated co-citations, which are color-coded based on the community assignments. The resulting visualization – which you can interact with here – captures the contours of academic debate and, as Healy notes, subdisciplinary topics. Healy’s network is based upon Neal Caren’s Sociology Citation Network done with Python/NetworkX/D3.js.
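The co-citation rule described above (two items are related whenever the same article cites both) is simple enough to sketch in a few lines of Python. The articles and cited items here are placeholders, and this is only an illustration of the counting step, not the code behind the networks mentioned.

```python
from collections import Counter
from itertools import combinations

# Placeholder data: each article maps to the set of items it cites.
CITATIONS = {
    "article_1": {"X", "Y", "Z"},
    "article_2": {"X", "Y"},
    "article_3": {"Y", "Z"},
}


def co_citation_counts(citations):
    """Count, for every pair of items, how many articles cite both of them."""
    counts = Counter()
    for cited in citations.values():
        # Sorting gives each pair one canonical order, e.g. ("X", "Y").
        for pair in combinations(sorted(cited), 2):
            counts[pair] += 1
    return counts


pairs = co_citation_counts(CITATIONS)
```

Each pair becomes an edge in the network and its count a natural edge weight; community detection would then run on top of that weighted graph.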

This is all to provide you a brief glimpse into how social network analysis might look. Before I begin visualizations, though, I need data to visualize and to develop my methodology for gathering this data in the first place. In my next post, I will begin to tackle fundamental issues in turning messy text into data: what will be my criteria for determining if two individuals mentioned in a letter have a relationship? How do I approach letters that are addressed to/refer to groups of individuals like the Senate? How will I classify types of relationships? For example, how do I distinguish the relationships between the addressor and the addressee of a letter (dear Cicero) vs. addressor and an indirect recipient of a message (dear Cicero, tell Caesar that…) vs. individuals referred to at a distance (dear Cicero, I heard that Balbus and Oppius…)?

Until then, stay tuned for updates.

-

Digital Journey

My Journey into the World of Digital Tools for Classics

Intro

I’ve always considered myself a techie. I’ve been using a personal laptop since I was a sophomore in high school; I was the first in my friend group to have an iPhone; and Whitaker’s Words, Perseus, and Peter Heslin’s Diogenes have been helping me with my translation assignments since I was a freshman at the College of the Holy Cross. Those last elements of my tricolon crescens were, for a while, the extent of my digital engagement with Classics, but looking back, I can trace the evolution of my interest in digital tools for Classics to three major events.

Homer in Fall 2008

The first occurred in the Fall of 2008 when I was a junior at Holy Cross. I was taking an author-level seminar on Homer with Mary Ebbott, who is now known as one of the two Editors of the Homer Multitext Project (hereafter HMT), along with Casey Dué Hackney. At the time, the HMT was in a nascent stage. It was born out of a meeting in 2000 and advanced slowly for years, with various attempts at a reconstruction of the text of the Venetus A and other manuscripts with the involvement of graduate and undergraduate students. A watershed moment came in May 2007, when the editors of the HMT acquired digital images of the three Venice manuscripts, and the work towards what we can find now on the HMT website began.

At the time, I knew none of this backstory (and in fact, I still didn’t know much of it until last week, when I e-mailed Mary Ebbott to get some context for this blog post). All I knew was that our final project for the semester was unlike anything that my peers and I had ever done in a seminar before. Thankfully, Professor Ebbott has saved all of her teaching files since she began at Holy Cross, so she provided me with the actual description of the project and its four parts as we received it almost a decade ago. It was divided into two major parts, one dealing with the text of a section of Book 6 of the Iliad and a second dealing with its scholia.

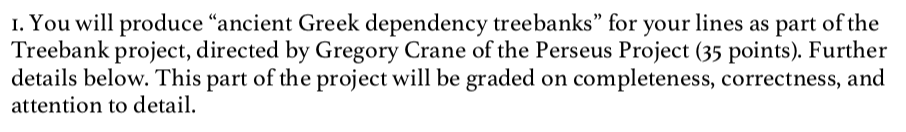

Treebanking

The idea of the dependency treebank was to annotate every sentence in our 40-ish line chunk of text in terms of main verbs, subjects, adjectives and which words they modify, participles and their relationship to a word in the sentence or the action of the sentence as a whole, and so on. This was an absolute dream of an assignment for a grammar nerd like me. What drew me into Classics in the first place was the languages; the inflections and paradigm charts and syntactic relationships really speak to the way that my mind works. I sometimes feel like I’d be just as at home in a field like math or physics in that regard.

The entry process was intuitive. For any given word in a sentence, dropdown menus allowed us to select the proper lemma (dictionary headword) and parsing from the available options (e.g., 1st sg. aor. act. ind. for ἔλυσα from the lemma λύω); we could also type in the proper lemma and parsing if Perseus’ info was incorrect. Given that this was almost a decade ago, I can’t find any screenshots of the treebanking interface as it was back then, but Arethusa, the treebanking interface’s latest iteration from Alpheios, lets you create your own treebanks and annotations. (See below for a screenshot of Arethusa.)

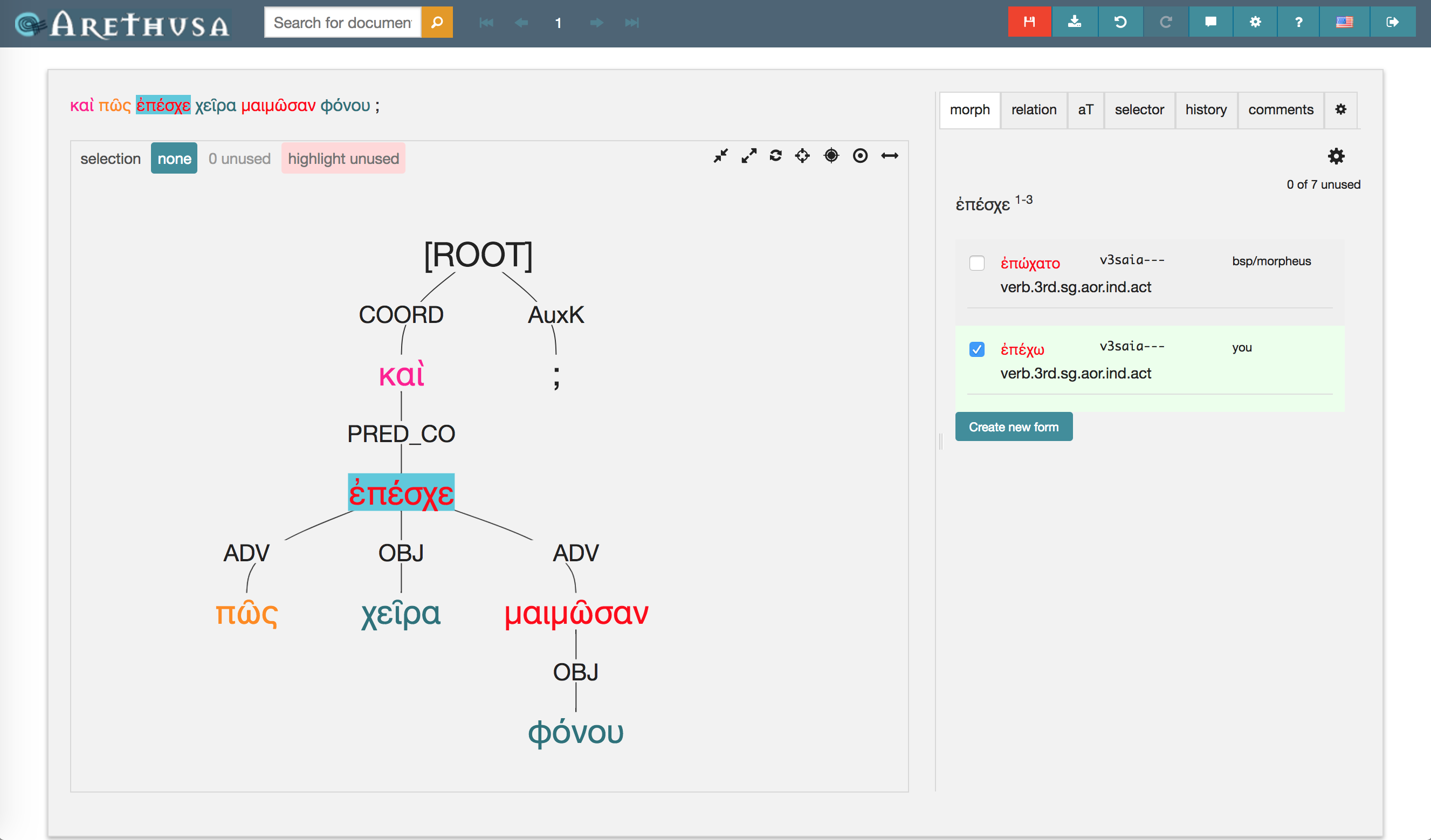

This was/is close reading in its most fundamental form. Every word has to be accounted for, including all the little γε’s and μέν’s, and you have to make decisions about the relationships of words in a sentence, especially when there are a number of interpretations. I found participles particularly challenging. Properly, a participle is an adjectival form of a verb that syntactically modifies a noun, but its translation serves either only to modify that noun (in an attributive relationship, which would require me to tag the participle “ATR”) or qualify the circumstances under which the main verb of the sentence is being performed (in a more adverbial sense, which would require me to tag the participle “ADV” and attach it to the main verb rather than the noun that it modifies; further, if the participle is necessary to complete the sense of the main verb, I’d tag it “OBJ” rather than the optional “ADV”). Let’s take a look at a sentence from Sophocles’ Ajax as an example:

καὶ πῶς ἐπέσχε χεῖρα μαιμῶσαν φόνου; (Soph. Aj. 50)

“And how did he stay his hand when it was eager for slaughter?”

The participle μαιμῶσαν syntactically modifies the noun χεῖρα. Both are feminine accusative singular. Easy enough. But if I were to tag μαιμῶσαν as ATR, an adjective modifying χεῖρα, and attach it to χεῖρα, the implication of the tag and relationship would result in a translation like “How did he stay the hand which was eager for slaughter?” The force of the participle is limited to modifying that hand as opposed to any other hand. A better interpretation, I think, would be to take the participle as describing the circumstances in which he would stop his hand, i.e., “when (or) although it was eager for slaughter.” I’ve used Arethusa to generate my treebank of the sentence, which would look something like this:

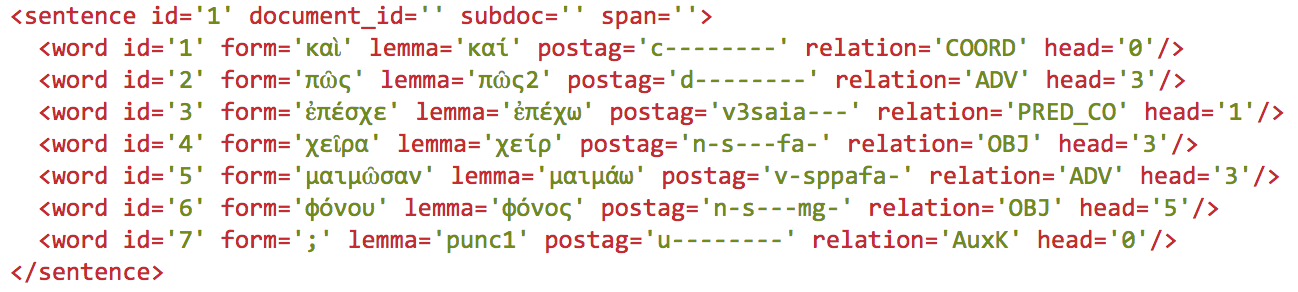

I confirmed the lemmata and the parsings through the “morph” section to the right and used dropdown menus in the “relation” section to tag each word. This treebank is generated by XML which is created from my choices and looks something like this:

Note how μαιμῶσαν attaches to ἐπέσχε rather than χεῖρα. It’s a value judgment for sure, but it’s one that forces you to make an interpretation based on the evidence in front of you. And this is the process that you have to go through for every word in the text.
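The difference between the two attachments can also be expressed as data. The Python sketch below is a simplified illustration, not the Perseus or Arethusa XML format: it keeps only three words of the sentence, the `Token` structure is hypothetical, and the `PRED` and `OBJ` labels on the verb and noun are assumptions added so that the participle has something to attach to. Only the `ATR` versus `ADV` contrast comes from the discussion above.

```python
from dataclasses import dataclass

VERB = "ἐπέσχε"
NOUN = "χεῖρα"
PARTICIPLE = "μαιμῶσαν"


@dataclass
class Token:
    index: int      # position of the token in this simplified sentence
    form: str       # the word as it appears in the text
    head: int       # index of the governing token; 0 marks the root
    relation: str   # dependency label


def annotate(participle_head, participle_relation):
    """Build the three-token sentence with one choice of participle attachment."""
    return [
        Token(1, VERB, 0, "PRED"),   # assumed label for the main verb
        Token(2, NOUN, 1, "OBJ"),    # assumed label for the direct object
        Token(3, PARTICIPLE, participle_head, participle_relation),
    ]


# "the hand which was eager": participle hangs off the noun.
ATTRIBUTIVE = annotate(participle_head=2, participle_relation="ATR")
# "when it was eager": participle hangs off the main verb.
CIRCUMSTANTIAL = annotate(participle_head=1, participle_relation="ADV")


def head_form(tokens, form):
    """Return the form of the word that governs `form`, or None for the root."""
    by_index = {token.index: token for token in tokens}
    token = next(t for t in tokens if t.form == form)
    return by_index[token.head].form if token.head else None
```

Calling `head_form(CIRCUMSTANTIAL, PARTICIPLE)` returns the verb, while `head_form(ATTRIBUTIVE, PARTICIPLE)` returns the noun: the interpretive choice is stored as nothing more than a different head index and label.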

This first instance of treebanking led to a year or so as a treebanker for Perseus. The process involved multiple treebankers working on the same text and a third person, a reconciler, managing differences and producing the final annotation. I read some texts that I wouldn’t have read otherwise, including odes of Pindar and Hesiod’s Shield, and I also had the opportunity to annotate an entire text on my own, Sophocles’ Ajax, the XML for which you can find here. It’s pretty nifty to see my name on a digital publication of sorts! Even if now that I’m looking at it with fresh eyes, I’m already seeing mistakes in the first few sentences …

Scholia

The second major part of the assignment was the part that was quite unfamiliar territory for all of us students in the seminar, except for maybe one or two. We had enough trouble reading and translating Iliadic Greek from our carefully prepared critical editions in modern type, let alone from an image of a manuscript. It was quite an interesting exercise, learning what the manuscript’s abbreviations meant and translating them essentially from scratch, as there was no commentary or existing translation to help us on our way.

I’ll admit that I don’t remember much about the actual outcome of my transcription, translation, and commentary, but the use of digital technology, namely digitized images and the ability to zoom, facilitated the task greatly. Digital tools for Classics don’t have to entail coding or complex algorithms or crazy detailed knowledge of computer science; they include websites and jpg’s and Twitter and other things that we access every day that create for us different modes of experience and engagement than a book or physical photograph would – not necessarily better (and, arguably, sometimes worse), just different.

The other important takeaway from the experience, now that I look back on it in hindsight, was the ability as an undergraduate to contribute to original and groundbreaking research. My name and the names of my classmates are credited on the Homer Multitext website. I didn’t know as an undergraduate junior what the Homer Multitext Project would become or how our work on the scholia would be used (I’m not sure that anyone did at the time), but to have been part of a project that helps us understand the Homeric poems in novel ways is immensely cool.

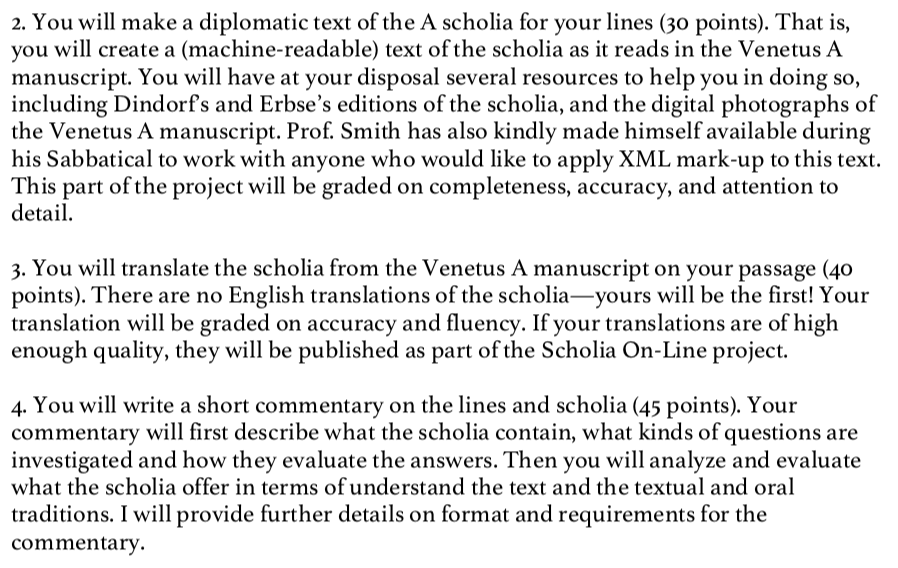

Now that I’m entering academia as a teacher in my own right, I’m actively thinking of ways to engage undergraduate students in Classics research in ways similar to my experiences in the Homer seminar and as a Perseus treebanker. The development of digital tools and corpora is an immensely fruitful way to do so, as some of the tools profiled here on ergaleia attest. See, for example, the Zenobia exhibit created by Brandi Mauldin at UNC Greensboro. She used her research on the warrior queen Zenobia to contribute material to the WIRE (Women in the Roman [Near] East) database of ancient artifacts and create an easily accessible exhibit that traces the life and career of Zenobia through material evidence. This is public-facing scholarship with benefits beyond the completion of a semester-long project, inasmuch as Brandi’s contributions to the WIRE Project make the information available to anyone else who wants to use it.

Another example comes from my alma mater, Holy Cross. Shortly after I graduated (of course – isn’t that always how these things work?), Holy Cross opened up its summer research program to the humanities, and because of our work in the Homer seminar, the department knew that Classics students were prime candidates for summer funding for original research and projects. The success of Classics majors’ participation in the summer research program led to the creation of the HCMID, the Holy Cross Manuscripts, Inscriptions, and Documents Club, a student organization devoted to the study of incompletely published primary sources. Various factions of the group have been presenting at professional conferences around the world, some members have been published (as undergraduates!!), and some of the projects on which they are working are unbelievably innovative and make me wish I’d been born even a few years later so that I could have capitalized on the existence of HCMID as an undergraduate student. The projects include ongoing work on the Homer Multitext, the digitization of Latin liturgical manuscripts with musical notations called neumes (click through the link to check out the project website with examples of things you can do with their work!), and the digital tagging of place and people names in manuscripts of Pliny.

As Mary Ebbott writes to me in an e-mail,

Doing the projects in the seminar really showed us how undergraduates could be involved and contribute. … When the summer research program at HC was extended to the humanities, we knew that we could do this work with undergrads because of the success of it in the seminar. The success of the summer research led to (1) the MID club at HC and (2) starting HMT undergraduate summer seminars at the Center for Hellenic Studies (we had done it in 2005 and 2007 with graduate students). So you all were trailblazers for the project. If you had failed, who knows what would have happened 😉.

How cool is that? 😎

Python Course

The second major stop on my journey towards embracing digital tools for Classics occurred almost a decade after the Homer seminar. In the meantime, I graduated from Holy Cross, earned my Master’s at NYU, and began my Ph.D. at Boston University. In my last year in the BU program, I started becoming good friends with a rising second year in the program, a certain Ryan Pasco, whose name I hope will be familiar as the co-consul of ergaleia.

He mentioned that over the summer, he had spent a week in Texas at a course aimed at teaching humanities scholars how to code. The course, entitled “Help! I’m a Humanist! - Humanities Programming with Python,” is part of Humanities Intensive Learning and Teaching (HILT), an annual digital humanities conference geared towards researchers, students, early career scholars, and cultural heritage professionals who want to learn more about applying digital techniques to their work. This year’s HILT (with the same Python course, among others) takes place in Philadelphia.

Ryan and I got to talking about the types of things that he learned, including working with text as data, and he mentioned that some of the scholarly areas in which I was interested, including tracing shifts in focalization throughout a large text like Ovid’s Metamorphoses, were totally viable via coding.

This was me upon hearing that:

The opportunity to participate in HILT was almost 10 months away, however, so I searched online to see if there was anything that I could use to start teaching myself how to code.

This was me upon looking for a place to start:

There are literally dozens, if not hundreds, of viable coding languages and lots of different ways to go about working towards my ultimate goal, which was to use a computer to analyze textual data in an efficient way. I floundered for a bit until I came across this blog post, “An Affable Guide to Leaving Classics”, by Kyle Johnson, which made a big impression on me in lots of ways, including through this paragraph that I ended up Tweeting about:

Particularly heartened by this (hashtag Python adventures): pic.twitter.com/HfujrAJ9nl

— Daniel Libatique (@DLibatique10) September 5, 2017

So, Python it was. Luckily, there are many resources available online to learn Python. I’d hardly say I’m proficient in Python now, but three resources in particular helped me immensely in gaining a little bit of Pythonic literacy. First was a course through udemy.com created by Jose Portilla entitled “Complete Python Bootcamp: Go from Zero to Hero in Python”:

The course was incredibly thorough, starting from the basic concepts of Python (object types like strings, lists, and dictionaries, and the methods and functions that one can use on each) and working towards function definitions, lambda expressions, and object-oriented programming. I still go back to this course often to check on certain methods and code examples, and it’s an amazing resource.

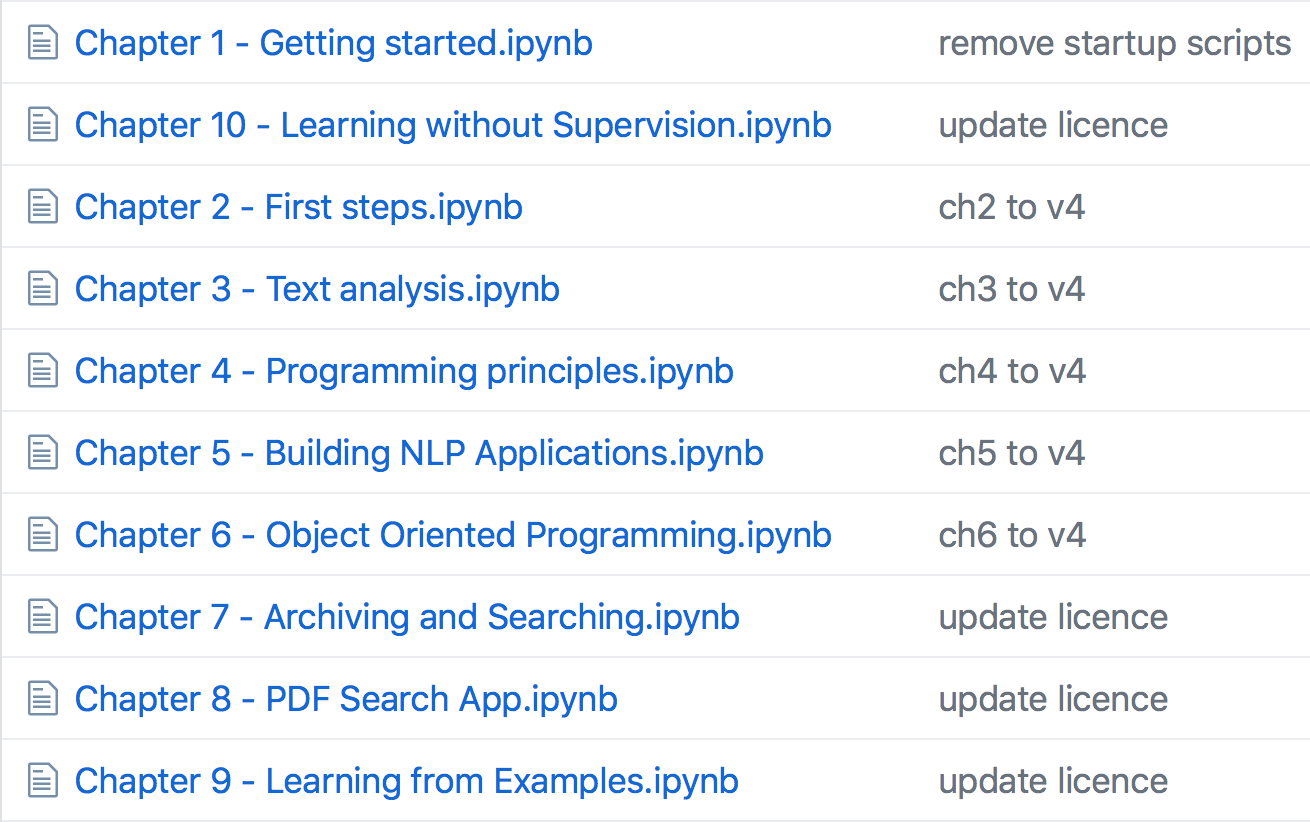

So, learning Python was great through this Udemy course, but how could I apply what I was learning to my Classical interests? Luckily around this time, Patrick Burns was team-teaching a course at ISAW-NYU entitled, appropriately enough, “Introduction to Digital Humanities for the Ancient World” (syllabus linked here). The schedule for the course included a series of very helpful Jupyter notebooks created by Folgert Karsdorp, Maarten van Gompel, and Matt Munson on using Python in a humanities context:

These notebooks built on the knowledge that I had gained through the Udemy course and helped me understand how to work with textual data.

A third resource, and in my opinion the holy grail for Python in Classical contexts, is CLTK, the Classical Language Toolkit. It’s a toolset that you can use to work with texts in many classical languages, with ancient Greek and Latin currently the most complete. It includes code, textual corpora, detailed documentation on how to use CLTK code, and tutorials in the form of Jupyter notebooks that help you get started with CLTK in your own research. CLTK’s code for Greek and Latin includes many tools for analysis and textual transformation, including lemmatizers (code that returns the dictionary headword for any inflected word), tools for Greek accentuation, part-of-speech tagging, tokenizers (splitting a text into analyzable chunks, like words or sentences), even poetic scansion.
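For readers new to these terms, here is a toy Python illustration of what tokenizing and lemmatizing mean. It deliberately does not use CLTK or imitate its API: the functions and the four-entry lookup table are hypothetical, and real lemmatizers are far more sophisticated than a dictionary lookup.

```python
import re

# Hypothetical lookup table from inflected Latin forms to dictionary headwords.
LEMMA_TABLE = {
    "amat": "amo",
    "amant": "amo",
    "puellam": "puella",
    "puellae": "puella",
}


def tokenize_sentences(text):
    """Split a text into sentences at whitespace following ., ?, or !."""
    return [s.strip() for s in re.split(r"(?<=[.?!])\s+", text) if s.strip()]


def tokenize_words(text):
    """Split a text into lowercase word tokens, dropping punctuation."""
    return re.findall(r"\w+", text.lower())


def lemmatize(tokens):
    """Pair each token with its headword, falling back to the token itself."""
    return [(token, LEMMA_TABLE.get(token, token)) for token in tokens]


sentences = tokenize_sentences("Puellam amat. Puellae amant!")
lemmata = lemmatize(tokenize_words(sentences[0]))
```

Once every inflected form is reduced to its headword, counting or searching by word rather than by form becomes straightforward, which is where much of the analytical payoff comes from.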

I also have to shout out part of CLTK’s leadership, Pat Burns, as my sort of inadvertent coding-for-Classics mentor. We met when he was still a grad student at Fordham and came to BU to present on Lucan at the graduate conference that I co-organized in 2015. We have stayed in touch on Twitter since then, and seeing him combine his work experience as a programmer with his Classical interests helped me realize the potential for digital tools like coding. On a personal note, he’s been extremely helpful in looking at and suggesting edits on snippets of my code, and his familiarity with CLTK helped me navigate its structure (and see all the dumb mistakes I make as I try to make use of CLTK’s corpora and code).

So, with the combination of Udemy, the ISAW syllabus, and CLTK, I’m feeling a bit more confident as a coder and seeing the potential for textual analysis on a scale that working with physical OCTs or Loebs would render an extremely long and complicated process. Of course, digital tools like Python are not a miraculous panacea that answers every question that a Classicist can ask of their sources; they are aids that make the process of analysis and research more efficient and perhaps broader than the Classicist originally envisioned.

OGL and SCS/AIA 2018

The last major stop on this digital journey was quite recent: this year’s SCS/AIA Annual Meeting in Boston and the Open Greek and Latin workshop that took place at Tufts University the day before. Since I’ve written on it at length in two blog posts (part one here, and part two here), I won’t rehash the details, but simply reinforce the conclusions that the OGL workshop and the SCS Annual Meeting helped me reach.

Digital tools allow for interpersonal connections beyond the confines of physical space, whether at a particular academic institution or at a conference. Communication with someone across the country or indeed across the world is now a matter of a few keystrokes and the click of a mouse or trackpad. Twitter, among other social media platforms, connects scholars and students in ways unheard of even three or four years ago.

The academic life can be a quite lonely one, perpetuated in no small part by the paradigm of the sole scholar going at it alone, coming up with original and innovative ideas and attaching their name alone to the end product. In an age where communication and collaboration are facilitated by digital tools, that no longer needs to be the paradigm, and the digital humanities sect of Classics is a perfect case in point. So many great projects that have real benefits for the Classics community at large are the result of collaboration, including the ones that I’ve mentioned in this blog post like the Homer Multitext and CLTK. Digital folks also tend to be extremely generous with sharing resources and help, as I’ve learned firsthand through quick and helpful responses to my countless “what am I doing wrong with this code” and “does anyone have any experience with __” tweets. The guiding principle of “strength in numbers” has the potential to create a product (whether digital or otherwise) stronger than one created by a lone person.

In Conclusion

My journey towards embracing digital tools for Classics has had some interesting stops along the way, from a seminar at Holy Cross to the desk in my apartment with Udemy up on my laptop and a glass of red wine next to it to Tufts University and the SCS/AIA 2018 venue in Boston. My graduate program at BU trained me well in the tenets of Classical scholarship, in terms of philological analysis and the incorporation of primary and secondary sources into a novel argument, but this parallel journey of sorts will undoubtedly serve to benefit my scholarly inquiries and pursuits.

I wanted in this blog post to give a brief (oops) explanation of my own personal journey and to share some of the resources that I used to learn. I hope that this reflection causes you, the reader, to think about how digital tools have impacted or can support your scholarly pursuits. Perhaps the digital tools that we profile here on ergaleia can give you new insights into your material or argument, but these are just a minor subset of all the digital tools out there. Our goal is to explain a tool’s uses and, most importantly, its utility in pedagogical and research contexts; feel free to browse our resources section and let us know if there’s a tool that you’d like us or a guest blogger to profile!

-

Presentation

Proseminar: Digital Tools for Classicists (February 2018)

At the 2018 AIA/SCS Annual Meeting in Boston, there was a day-long workshop entitled Ancient MakerSpaces (#AncMakers), organized by Patrick Burns of ISAW (@diyclassics). The workshop was, as described by Pat in a tweet, “designed to introduce participants to and [get] them started with digital resources & methods in classics/archaeology/etc. as well as to showcase solid scholarship in this area.” There was also a pre-AIA/SCS day-long workshop at Tufts on the Open Greek and Latin project which also showcased a lot of interesting scholarship and digital tools. There were a number of awesome tools and reading/learning environments showcased each day to which we wanted to bring your attention for use in your own pedagogy or scholarship.

This list is by no means exhaustive, as there were many other projects and environments highlighted, including:

- the LACE OCR project (see Daniel’s Tweet thread here),

- the Ugarit Translation Aligner tool,

- the new and improved Logeion web and app interfaces (see Daniel’s Tweet thread here),

- the Homer Multitext Project, now coming up on a full first edition of the Venetus A,

- the new and improved Alpheios suite of reading tools,

- ToPan and Melete, topic modeling tools,

- OpenARCHEM, a repository for data for archaeometry (the study of archaeological material),

- and the suite of lexicographical tools at the Classical Language Toolkit.

Time precludes us from going into detail about these, but feel free to follow the links and ask us questions about them; if we don’t know the answers, we can point you towards those who do.

The tools that we are about to discuss are, we feel, useful for the types of scholarship and courses that BU Classics currently generates and offers. Some (Scaife, Hedera, QCrit) are not yet publicly available but will be soon. The other three (The Bridge, Pleiades/QGIS, and WIRE) are databases and resources available to use (and contribute to!) right now.

Each tool in the following list will be the subject of a more detailed write-up in forthcoming blog posts.

1. Scaife Viewer / Perseus 5.0

The Perseus Project needs no introduction, but James Tauber and his web development company Eldarion have been hired to create the next version, updating its decade-plus-old infrastructure and infusing it with exciting new tools. Among these tools are hotlinks to highlighted passages, lemmata search tools, and morphology paradigm generation (e.g., all forms of a word found in a certain text). See Daniel’s Tweet thread from the Scaife presentation at the OGL Workshop at Tufts (1/3/18) here.

Powerful lemma search tool included. Word list generation. Intra and intertextual word frequencies. @rympasco just leaned over to me and whispered "Prose comp." #oglworkshop #aiascs pic.twitter.com/kBEYW1oJh3

— DLibatique10 (@DLibatique10) January 3, 2018

2. The Bridge

The Bridge, under the direction of Bret Mulligan, generates vocabulary lists for numerous Latin and Greek texts and textbooks. You can set filters, like chapter or line numbers, and choose parameters to display along with the definitions, like how often a word appears, when it first appears, what part of speech it is, etc. The goal is to support a student’s acquisition of necessary vocabulary by bridging what the student knows to what the student doesn’t know. See Daniel’s Tweet thread here.

The BRIDGE allows for customized vocabulary lists -- by work or works, textbooks, particular passages. But also allows user to exclude previously learned words. Would be amazing for PhD student prepping for translation exams. #aiascs #AncMakers

— Ryan Pasco (@rympasco) January 6, 2018

3. Hedera

Hedera analyzes a passage of Latin to determine its appropriateness for a student’s current reading and vocabulary level. It can output graphs / percentages showing how far a student needs to go in terms of vocabulary commitment and comprehension. Eventual goals include the generation of a passage’s lemmata and automatic recommendation of passages appropriate to reading level. See Daniel’s Tweet thread here.
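To give a rough sense of what a vocabulary "percentage" for a passage might look like, here is a minimal Python sketch. It is not Hedera's actual method, which is not yet public; the function name `known_share` and the sample word list are our own illustrative assumptions, and the sketch matches surface forms only, whereas a real tool would presumably work with lemmata.

```python
import re


def known_share(passage: str, known_words: set[str]) -> float:
    """Return the fraction of word tokens in `passage` found in `known_words`.

    Hypothetical helper for illustration only: it compares surface forms,
    so "partes" and "pars" count as different words.
    """
    tokens = re.findall(r"[a-z]+", passage.lower())
    if not tokens:
        return 0.0
    known = sum(1 for token in tokens if token in known_words)
    return known / len(tokens)


# A made-up list of forms a student already knows (an assumption).
student_vocabulary = {"est", "in", "omnis", "tres"}
passage = "Gallia est omnis divisa in partes tres"

share = known_share(passage, student_vocabulary)
print(f"{share:.0%} of the tokens are already known")
```

A passage where this share is very low is probably too hard for the student right now; one where it is very high may offer little new vocabulary.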

Hedera will offer an adaptive Latin-learning experience; thrilled at the opportunity to use it in a classroom setting @TBihl https://t.co/PzY7Y9R8mF

— Ryan Pasco (@rympasco) January 6, 2018

4. WIRE: Women in the Roman East Project

WIRE is a database of material culture relating to the lived experiences of ancient women in the Roman Near East; so, you’ll find, e.g., coins and epitaphs depicting average women up to empresses, but you won’t find depictions of goddesses. Each object is tagged with metadata specific to the medium (e.g., portrait bust, coin) to make it easier to search and find what you’re looking for. The goal is to recover lost or non-prominent voices from the past through material culture. See Daniel’s Tweet thread here.

Exciting work going on w. Women in the Roman East (https://t.co/qnwVHK5jTR). Going forward, will include sample lesson plans. Hope to use while TFing Roman civ this semester. #AncMakers #aiascs

— Ryan Pasco (@rympasco) January 6, 2018

5. Quantitative Criticism Lab (University of Texas - Austin)

Designed by UT-Austin’s Quantitative Criticism Lab, this stylometric tool allows the user to analyze Latin texts from Ennius to Neo-Latin based on twenty-nine stylistic criteria (use of personal pronouns, mean sentence length, and frequency of relative clauses, to name a few). The user can choose any mixture of authors, works, and sections from the tool’s extensive text list, select specific stylistic criteria, and receive downloadable data tables and graphs. In the future, the user will be able to load their own texts and compare them with items from the database on stylometric grounds. See Daniel’s Tweet thread here and Ryan’s Tweet thread here.
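For readers wondering what a stylometric criterion looks like in practice, here is a small Python sketch that computes two of the features named above, mean sentence length and personal pronoun frequency. This is our own simplified illustration, not the Lab's code: the function name `stylometric_features`, the short pronoun list, and the punctuation-based sentence splitting are all assumptions.

```python
import re

# Illustrative and deliberately incomplete list of personal pronoun forms
# (an assumption; a real tool would use a much fuller list).
PRONOUNS = {"ego", "me", "mihi", "tu", "te", "tibi", "nos", "vos"}


def stylometric_features(text: str) -> dict[str, float]:
    """Compute two simple features for a Latin text.

    Sentences are split naively on ., !, and ?; words are runs of letters.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    tokens = re.findall(r"[a-z]+", text.lower())
    if not sentences or not tokens:
        return {"mean_sentence_length": 0.0, "pronoun_frequency": 0.0}
    pronoun_count = sum(1 for token in tokens if token in PRONOUNS)
    return {
        "mean_sentence_length": len(tokens) / len(sentences),
        "pronoun_frequency": pronoun_count / len(tokens),
    }


sample = "Quid agis? Ego valeo. Tu mihi litteras mitte."
for name, value in stylometric_features(sample).items():
    print(f"{name}: {value:.3f}")
```

Running the same function over two authors or two works gives numbers that can be set side by side, which is the basic idea behind comparing texts on stylometric grounds.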

Toolkit analyzes Latin texts spanning from Ennius to Neo Latin using 29 stylometric featres #AncMakers #aiascs pic.twitter.com/CXETfnmBJS

— DLibatique10 (@DLibatique10) January 6, 2018

6. Pleiades and ToposText

Pleiades is a database of physical sites in the ancient world that gives location information and suggested ancient texts and modern scholarly citations. You can export any number of place citations, up to all 35,459 entries in the database, and represent them pictorially through a GIS reader. Other ways to use it include social network analyses and visualizations of real-life connections, like hostile parties during the Peloponnesian War. Many geographic projects online utilize Pleiades data, like ToposText, which keys a gazetteer of ancient place names to mentions in ancient texts. See Daniel’s Tweet thread here.

H: example given was a "network of hostility" for the Peloponnesian War -- visually representing alliances/campaigns. Full of possibilities! #aiascs #ancmakers

— Ryan Pasco (@rympasco) January 6, 2018